Why your agents suck and the need for ontology

Mitchell Bregman

Nov 13, 2025

I wanted to share some thoughts that have been bouncing around in my head lately. This whole “agents this, agents that, agents, agents, agents” wave is pretty ridiculous - not in the sense of their potential, but in how few people actually know what they’re doing. I’m not going to sit here and tell you I’ve got it all figured out (or that I even know what I’m doing, lol), but I will tell you that the foundation most of these agent frameworks and “AI for X” products are built on is wrong. We’re missing a critical layer.

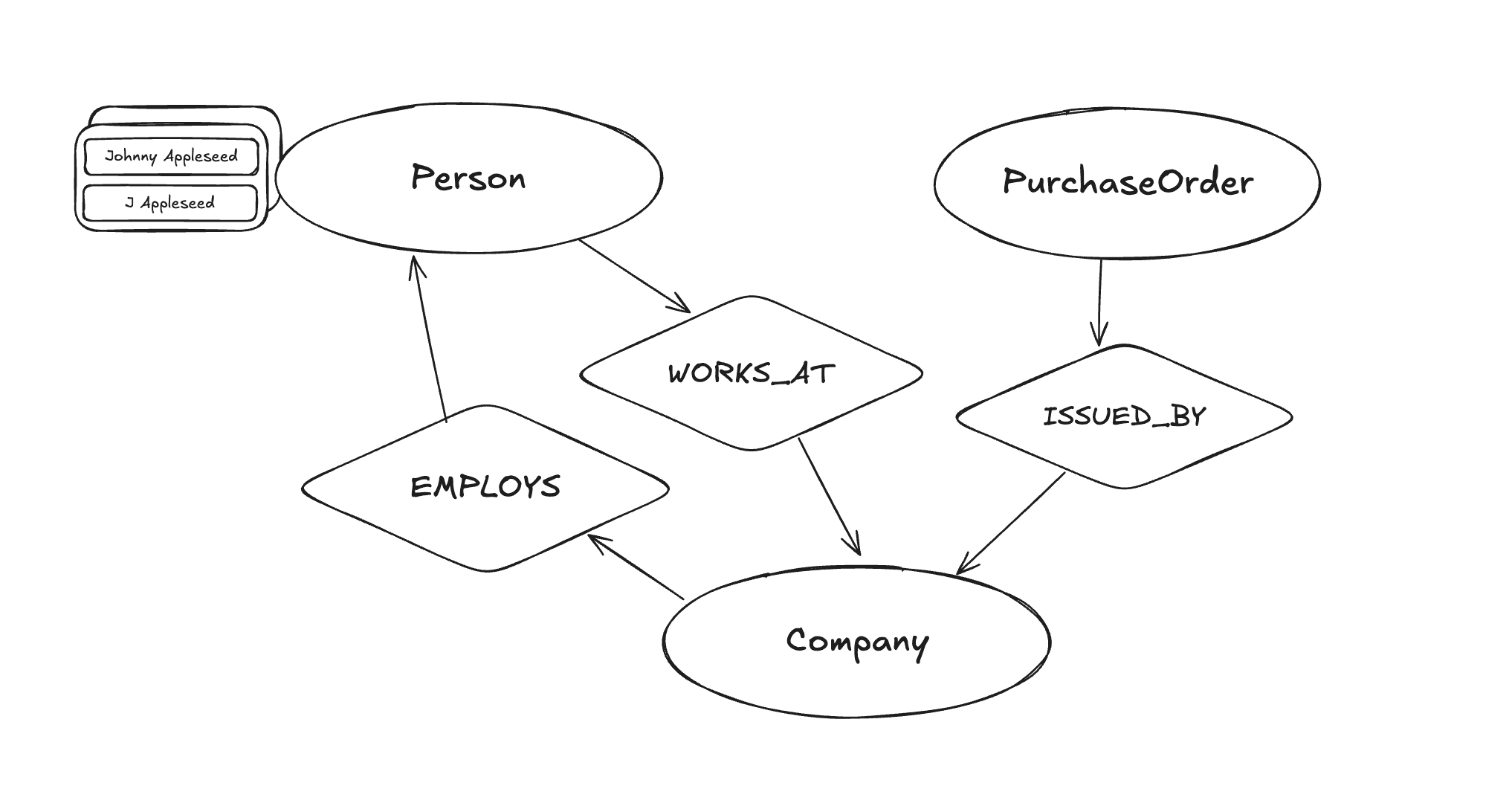

Everyone’s obsessed with giving agents memory, tools, retrieval, long-term context. But none of that matters if the agents don’t understand the world they operate in. They “act” like they grasp reality, but they don’t really know what a “company” is. They’ll swear a “purchase order” is just a PDF with numbers, or treat “Johnny Appleseed” and “J Appleseed” as unrelated strangers. At the end of the day, they’re not “thinking”; they’re just predicting the next likely token and hoping it sounds smart. We’re trying to teach models to reason on top of frameworks that were only ever meant to move and manipulate data, not make sense of it.

What we need is a world model of the “universe” we are operating in - a shared semantic language that turns raw data into something machines can actually comprehend and traverse.

Ontology

OK, yes, it sounds complex and overly academic. I’m not saying it isn’t, but I’m also not talking about the philosophical kind buried in dusty research papers. I mean something practical. A living, evolving map of what exists, how those things connect, and what they mean. Ontology gives your agents a shared language. A unified model of reality they can read from, write to, and reason over. It’s what turns “data” into “understanding.” Instead of hallucinating structure, they operate within one.
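To make “a map of what exists, how those things connect, and what they mean” a little more concrete, here’s a minimal Python sketch of the schema half of an ontology: a set of entity types plus relation types that constrain which kinds of entities they may connect. The type and relation names are hypothetical, and the domain/range vocabulary is borrowed from RDF Schema. Real ontology languages such as OWL also express things like class hierarchies and cardinality, which this toy skips.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RelationType:
    """A kind of edge the ontology permits, constrained by the types it connects."""
    name: str
    domain: str  # entity type allowed on the subject side (RDFS-style "domain")
    range: str   # entity type allowed on the object side (RDFS-style "range")


@dataclass
class Ontology:
    entity_types: set[str] = field(default_factory=set)
    relation_types: dict[str, RelationType] = field(default_factory=dict)

    def allows(self, subject_type: str, relation: str, object_type: str) -> bool:
        """True only if the relation exists and connects the expected entity types."""
        rel = self.relation_types.get(relation)
        return (
            rel is not None
            and subject_type in self.entity_types
            and object_type in self.entity_types
            and rel.domain == subject_type
            and rel.range == object_type
        )


# Hypothetical types and relations, for illustration only.
ontology = Ontology(
    entity_types={"Person", "Company", "Contract"},
    relation_types={
        "works_at": RelationType("works_at", domain="Person", range="Company"),
        "signed": RelationType("signed", domain="Company", range="Contract"),
    },
)

print(ontology.allows("Person", "works_at", "Company"))   # True
print(ontology.allows("Contract", "works_at", "Person"))  # False
```

The point of the check is that an agent can’t write “a contract works at a person” into the world model, no matter how confident the model sounded when it produced that claim.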

And during the process of defining this ontology - this master map - you end up with an incredibly rich and powerful knowledge graph for your entire universe. This graph has nodes that represent the key entities (or primitives) in your world - people, companies, products, transactions, events - and edges (or connections) that define how they’re related.
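A minimal, in-memory sketch of that graph, assuming plain Python rather than a graph database: nodes carry an entity type, and edges are directed, labeled relationships between them. The ids (`johnny_appleseed`, `acme`, `po_1042`) and relation names are made up for illustration.

```python
from typing import Optional


class KnowledgeGraph:
    """A tiny in-memory knowledge graph: typed nodes plus directed, labeled edges."""

    def __init__(self) -> None:
        self.nodes: dict[str, str] = {}                     # node id -> entity type
        self.edges: dict[str, list[tuple[str, str]]] = {}   # subject id -> [(relation, object id)]

    def add_node(self, node_id: str, entity_type: str) -> None:
        self.nodes[node_id] = entity_type

    def add_edge(self, subject: str, relation: str, obj: str) -> None:
        # Refuse dangling edges: both endpoints must already be known entities.
        if subject not in self.nodes or obj not in self.nodes:
            raise KeyError(f"unknown endpoint in ({subject}, {relation}, {obj})")
        self.edges.setdefault(subject, []).append((relation, obj))

    def neighbors(self, node_id: str, relation: Optional[str] = None) -> list[str]:
        """Nodes one hop away from node_id, optionally filtered by relation name."""
        return [o for r, o in self.edges.get(node_id, []) if relation is None or r == relation]


kg = KnowledgeGraph()
kg.add_node("johnny_appleseed", "Person")
kg.add_node("acme", "Company")
kg.add_node("po_1042", "Transaction")  # hypothetical purchase order id
kg.add_edge("johnny_appleseed", "works_at", "acme")
kg.add_edge("acme", "issued", "po_1042")

print(kg.neighbors("johnny_appleseed", "works_at"))  # ['acme']
print(kg.nodes[kg.neighbors("acme")[0]])             # Transaction
```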

Each node becomes a living object. It doesn’t just store static attributes; it carries context, history, and meaning. It can answer questions like who changed what, when, and why. It’s where every new fact, observation, or inference from your agents gets written back… not as raw text, but as structured truth.
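One way to read “a living object” is a node that never overwrites a value, only appends assertions recording who made them, when, and why. The sketch below assumes that append-only design; the `Assertion` fields, agent names, and reasons are hypothetical, and “current value” here simply means the most recent assertion (a real system might instead weigh sources or resolve conflicts).

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Assertion:
    """One fact written to a node, recording who wrote it, when, and why."""
    attribute: str
    value: str
    asserted_by: str       # e.g. the agent or system that made the claim
    asserted_at: datetime
    reason: str            # e.g. the source document or inference behind it


@dataclass
class Node:
    node_id: str
    entity_type: str
    assertions: list[Assertion] = field(default_factory=list)

    def write(self, attribute: str, value: str, asserted_by: str, reason: str,
              at: Optional[datetime] = None) -> None:
        """Append a new fact rather than overwrite, so earlier values stay in the history."""
        when = at if at is not None else datetime.now(timezone.utc)
        self.assertions.append(Assertion(attribute, value, asserted_by, when, reason))

    def history(self, attribute: str) -> list[Assertion]:
        """Every assertion about one attribute, oldest first."""
        return sorted((a for a in self.assertions if a.attribute == attribute),
                      key=lambda a: a.asserted_at)

    def current(self, attribute: str) -> Optional[str]:
        """Most recent value of an attribute, or None if nothing was ever asserted."""
        past = self.history(attribute)
        return past[-1].value if past else None


johnny = Node("johnny_appleseed", "Person")
johnny.write("title", "Analyst", asserted_by="email_agent", reason="email signature",
             at=datetime(2025, 1, 10, tzinfo=timezone.utc))
johnny.write("title", "Manager", asserted_by="crm_agent", reason="CRM record update",
             at=datetime(2025, 6, 2, tzinfo=timezone.utc))

print(johnny.current("title"))  # Manager
for a in johnny.history("title"):
    print(a.asserted_at.date(), a.value, a.asserted_by, a.reason)
```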

When one agent reads an email and recognizes that “Johnny Appleseed joined Acme,” it adds that relationship to the graph. When another agent later sees “Acme signed a new contract,” it doesn’t start from scratch - it already understands who Johnny is, where he works, and how that event fits into the bigger picture. That’s when agents stop being disconnected performers and start behaving like a system - reading and writing to the same shared model of the world. The ontology becomes the backbone of reasoning, the memory that doesn’t fade, the ground truth they can all build on.
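The Johnny/Acme scenario can be sketched with a single triple store shared by two agents. This is a toy under loud assumptions: the extraction step (turning an email into a triple) is faked with hard-coded writes where an LLM or entity extractor would normally sit, and `SharedGraph`, `email_agent`, `contracts_agent`, and `Contract-001` are hypothetical names.

```python
class SharedGraph:
    """A minimal shared triple store that every agent reads from and writes to."""

    def __init__(self) -> None:
        self.triples: set[tuple[str, str, str]] = set()

    def add(self, subject: str, relation: str, obj: str) -> None:
        self.triples.add((subject, relation, obj))  # a set makes repeated writes idempotent

    def objects(self, subject: str, relation: str) -> set[str]:
        return {o for s, r, o in self.triples if s == subject and r == relation}

    def subjects(self, relation: str, obj: str) -> set[str]:
        return {s for s, r, o in self.triples if r == relation and o == obj}


def email_agent(graph: SharedGraph) -> None:
    # Stand-in for extraction from the email "Johnny Appleseed joined Acme".
    graph.add("Johnny Appleseed", "works_at", "Acme")


def contracts_agent(graph: SharedGraph) -> dict[str, set[str]]:
    # Stand-in for extraction from the update "Acme signed a new contract".
    graph.add("Acme", "signed", "Contract-001")
    # Rather than starting from scratch, read what other agents already wrote.
    return {
        "contracts": graph.objects("Acme", "signed"),
        "people_at_signer": graph.subjects("works_at", "Acme"),
    }


graph = SharedGraph()
email_agent(graph)
print(contracts_agent(graph))
# {'contracts': {'Contract-001'}, 'people_at_signer': {'Johnny Appleseed'}}
```

The second agent never saw the email, yet it can say who works at the company that just signed, because the first agent left that fact in the shared graph.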

It’s important to note that ontology alone doesn’t make an agent intelligent. That’s certainly not the case I’m making. Ontology gives the agent a map of the world, but a map isn’t the same as navigation. Without some level of statefulness - the ability to remember where it’s been, what it’s seen, and what it already knows - the agent is still just exploring the graph blind, re-discovering the same ground over and over. The ontology provides structure; memory gives it continuity. You need both for real reasoning. And this is where ontology fundamentally diverges from the flat vector spaces we’re all used to today.
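To illustrate the map-versus-navigation point, here’s a deliberately simplified sketch in which “memory” is nothing more than the set of nodes an agent has already expanded, kept across calls. The toy graph and `StatefulExplorer` are hypothetical, and real agent memory would hold far more than visited ids; the sketch only shows how state stops the agent from re-discovering the same ground.

```python
from collections import deque

# Hypothetical toy graph as an undirected adjacency list of entity ids.
GRAPH: dict[str, list[str]] = {
    "acme": ["johnny", "contract_001"],
    "johnny": ["acme", "email_17"],
    "contract_001": ["acme"],
    "email_17": ["johnny"],
}


class StatefulExplorer:
    """Breadth-first exploration that remembers what it has already expanded."""

    def __init__(self, graph: dict[str, list[str]]) -> None:
        self.graph = graph
        self.seen: set[str] = set()  # memory that persists across calls
        self.expansions = 0          # neighbor lookups actually performed

    def explore(self, start: str) -> list[str]:
        """Return nodes discovered for the first time, in breadth-first order."""
        newly_found: list[str] = []
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node in self.seen:
                continue  # known from earlier in this call or from a previous call
            self.seen.add(node)
            self.expansions += 1
            newly_found.append(node)
            queue.extend(self.graph.get(node, []))
        return newly_found


explorer = StatefulExplorer(GRAPH)
print(explorer.explore("acme"))    # ['acme', 'johnny', 'contract_001', 'email_17']
print(explorer.explore("johnny"))  # []
print(explorer.expansions)         # 4
```

A stateless agent is the same loop with `seen` cleared at the start of every call; its second query would expand all four nodes again instead of returning immediately.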

RAG today is basically: “Find similar chunks of text and hope the answer is in there.”
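For contrast, here is the “find similar chunks” pattern reduced to a toy. It assumes bag-of-words vectors and cosine similarity as a stand-in for a learned embedding model and a vector database, and the chunks are invented.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words counts: a crude stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Top-k chunks by similarity; nothing checks whether they actually answer the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


chunks = [
    "Acme signed a new contract in March.",
    "Johnny Appleseed joined Acme last year.",
    "J Appleseed presented the contract terms.",
]
print(retrieve("Who at Acme signed the contract?", chunks))
# ['Acme signed a new contract in March.']
```

The top hit shares the most words with the question yet never says who signed, and nothing ties “Johnny Appleseed” to “J Appleseed”: proximity, not understanding.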

An ontology-based system says: “I already know what entities exist, how they relate, what changed, and who said it. Ask me something and I can answer with traceable facts - not vibes.”
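And a sketch of the ontology-side answer: facts stored with their source and date, so every answer, including a two-hop one, comes back with its evidence, and an unknown comes back as empty rather than as a guess. The facts, source labels, and function names are hypothetical, and a real system would likely run this as a graph query rather than a list scan.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    relation: str
    obj: str
    source: str       # where the fact came from (document, system, or agent)
    observed_on: str  # ISO date the fact was recorded


# Hypothetical facts and source identifiers, for illustration only.
FACTS = [
    Fact("Johnny Appleseed", "works_at", "Acme", "email:welcome-note", "2025-01-10"),
    Fact("Acme", "signed", "Contract-001", "crm:deal-record", "2025-03-04"),
]


def ask(subject: str, relation: str) -> list[tuple[str, str, str]]:
    """(value, source, date) for each matching fact; an empty list means 'unknown', not a guess."""
    return [(f.obj, f.source, f.observed_on)
            for f in FACTS if f.subject == subject and f.relation == relation]


def contracts_of_employer(person: str) -> list[dict]:
    """Two hops, person -works_at-> company -signed-> contract, keeping every source."""
    results = []
    for company, employment_source, _ in ask(person, "works_at"):
        for contract, contract_source, _ in ask(company, "signed"):
            results.append({"contract": contract, "via": company,
                            "sources": [employment_source, contract_source]})
    return results


print(ask("Johnny Appleseed", "works_at"))  # [('Acme', 'email:welcome-note', '2025-01-10')]
print(ask("Johnny Appleseed", "manages"))   # []
print(contracts_of_employer("Johnny Appleseed"))
# [{'contract': 'Contract-001', 'via': 'Acme', 'sources': ['email:welcome-note', 'crm:deal-record']}]
```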

That’s the shift - from retrieval to reasoning, from semantic proximity to semantic truth. My view is that data engineering will focus on exactly this over the next 5 years.

© 2025 Intergalactic Data Labs, Inc.